Artificial Intelligence (AI) has become a game-changer, transforming industries and personal experiences alike. But when I first started exploring AI, I realized something important: before we rely on it, we need to understand what it is and how it behaves. In this article, I’ll share why that understanding isn’t just handy, but essential.

From healthcare to finance, and from marketing to autonomous vehicles, AI is reshaping the way we live and work. With this surge in adoption comes a pressing need to address security concerns. Below, we’ll look at why prioritizing security when using AI matters for both individuals and organizations.

Protecting Sensitive Data

One of the most critical aspects of AI security is safeguarding sensitive information. AI systems often process vast amounts of data, ranging from personal identification details to confidential business records. A security breach can result in severe consequences, including financial loss, reputational damage, and legal liabilities. Implementing robust security measures is essential to ensure the protection of this valuable data.
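To make this concrete, here is a minimal sketch of one such measure: stripping or pseudonymizing sensitive fields before a record ever reaches an AI pipeline. Everything named here (`PSEUDONYM_KEY`, `prepare_record`, the `customer_id` and `note` fields) is hypothetical and only for illustration, and the email pattern is deliberately simple, so treat it as a starting point rather than a complete data-protection solution.

```python
import hashlib
import hmac
import re

# Hypothetical secret for illustration; in practice, load it from a secrets
# manager or environment variable rather than hard-coding it.
PSEUDONYM_KEY = b"replace-with-a-real-secret"

# Deliberately simple pattern; real-world email detection needs more care.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def pseudonymize(value: str) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256, truncated).

    The same input always maps to the same token, so records stay linkable,
    but the original value cannot be read back from the token.
    """
    digest = hmac.new(PSEUDONYM_KEY, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def redact_emails(text: str) -> str:
    """Mask anything that looks like an email address."""
    return EMAIL_PATTERN.sub("[EMAIL]", text)


def prepare_record(record: dict) -> dict:
    """Return a copy of the record that is safer to hand to an AI system."""
    return {
        "customer_id": pseudonymize(record["customer_id"]),
        "note": redact_emails(record["note"]),
    }


if __name__ == "__main__":
    raw = {
        "customer_id": "C-1001",
        "note": "Contact jane.doe@example.com about the invoice.",
    }
    print(prepare_record(raw))
```

The keyed hash is a design choice: a plain hash of a short ID can often be reversed by guessing, while an HMAC requires the secret key as well.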

Guarding Against Malicious Attacks

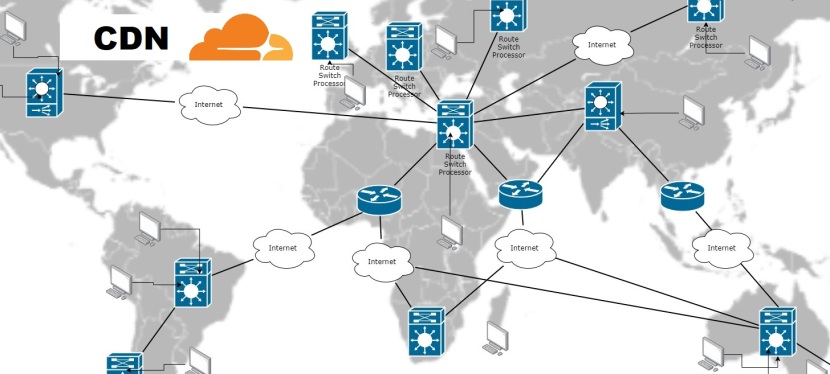

As AI systems become more integrated into our daily lives, they also become attractive targets for cybercriminals. Malicious actors may attempt to exploit vulnerabilities in AI models or manipulate them for nefarious purposes. This could lead to outcomes such as misinformation, financial fraud, or even physical harm in cases involving autonomous systems. Security measures like encryption, access controls, and regular vulnerability assessments are crucial in safeguarding against such attacks.
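As a rough illustration of the access-control idea, the sketch below gates actions on an AI system by role and denies anything that is not explicitly granted. The roles, actions, and function names are all assumptions made up for this example; a real deployment would usually lean on an existing identity and authorization service instead.

```python
# Hypothetical role-to-permission mapping for an AI service.
ROLE_PERMISSIONS = {
    "admin": {"predict", "retrain", "export_model"},
    "analyst": {"predict"},
}


def is_allowed(role: str, action: str) -> bool:
    """Deny by default: unknown roles and unlisted actions are rejected."""
    return action in ROLE_PERMISSIONS.get(role, set())


def run_action(role: str, action: str) -> str:
    """Perform an action only if the caller's role permits it."""
    if not is_allowed(role, action):
        raise PermissionError(f"role {role!r} may not perform {action!r}")
    # Placeholder for the real work (calling the model, starting a job, ...).
    return f"{action} executed"


if __name__ == "__main__":
    print(run_action("analyst", "predict"))
    try:
        run_action("analyst", "export_model")
    except PermissionError as err:
        print("blocked:", err)
```

Denying by default means a forgotten entry in the mapping fails closed instead of quietly granting access.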

Ensuring Ethical Use of AI

Ethical considerations are paramount in the development and deployment of AI, and security measures play a significant role in upholding them. Upholding ethical standards includes preventing biased or discriminatory outcomes, ensuring transparency in decision-making, and respecting privacy rights. A secure AI system not only protects against external threats but also helps ensure that the technology is used responsibly and in accordance with ethical guidelines.

Mitigating Model Poisoning and Adversarial Attacks

AI models are susceptible to attacks aimed at manipulating their behavior. Model poisoning involves feeding deceptive data to the training process, which can compromise the model’s accuracy and integrity. Adversarial attacks involve subtly modifying input data to mislead the AI system’s output. By implementing security measures such as robust model validation and continuous monitoring, organizations can effectively mitigate these risks.
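To show how small an adversarial change can be, here is a toy sketch using a hand-written linear classifier. The weights, input, and step size `epsilon` are invented for illustration. The perturbation nudges each feature a small step against the sign of its weight, in the spirit of the fast gradient sign method (for a linear model, the gradient of the score with respect to the input is simply the weight vector). Real attacks target far larger models, but the idea is the same.

```python
# Toy linear classifier: predicts class 1 when the score is positive.
WEIGHTS = [2.0, -1.0, 0.5]   # made-up weights for illustration
BIAS = -0.2


def score(x):
    """Linear score w . x + b."""
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS


def predict(x):
    return 1 if score(x) > 0 else 0


def sign(v):
    return (v > 0) - (v < 0)


def adversarial_example(x, epsilon):
    """Shift each feature by at most epsilon in the direction that pushes
    the score toward the opposite class."""
    direction = -1 if predict(x) == 1 else 1
    return [xi + direction * epsilon * sign(w) for xi, w in zip(x, WEIGHTS)]


if __name__ == "__main__":
    original = [0.3, 0.2, 0.4]
    perturbed = adversarial_example(original, epsilon=0.15)
    print("original prediction: ", predict(original))
    print("perturbed prediction:", predict(perturbed))
```

With these numbers, no feature moves by more than 0.15, yet the prediction flips. Checking how a model behaves on slightly perturbed inputs like this is one simple form the validation and monitoring mentioned above can take.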

Building Trust and Confidence

Trust is a cornerstone of any successful AI deployment. Users, whether they are consumers or stakeholders within an organization, need to have confidence in the AI system’s reliability and security. Implementing comprehensive security measures not only protects against potential breaches but also fosters trust in the technology, driving greater adoption and acceptance.

Wrap-up

In an increasingly AI-driven world, security deserves to be a top priority. Protecting sensitive data, guarding against malicious attacks, ensuring ethical use, mitigating model poisoning and adversarial attacks, and building trust are all vital aspects of AI security. By prioritizing security in the development, deployment, and maintenance of AI systems, we can get far more out of this transformative technology while minimizing risks and working toward a safer, more reliable future.

Apologies, everyone!

I’ve been a bit MIA lately due to a time crunch, but that’s about to change.

Thanks again for returning to my blog!